Azure API Management for Microservices

Azure API Management (APIM) simplifies running microservices by centralizing cross-cutting tasks such as authentication, traffic management, and security. It sits between your APIs and their consumers, which removes duplicated logic from individual services and keeps behavior consistent across them. With an API gateway, a developer portal, and policies for routing and caching, APIM supports scaling and secure operations. It processes over 3 trillion requests monthly for more than 35,000 customers, making it a proven choice for managing APIs at scale.

Key highlights:

- Traffic Management: Advanced routing, caching, and circuit breakers.

- Security: OAuth 2.0, mTLS, and integration with Azure Key Vault.

- Developer Tools: Self-service portal, versioning, and CI/CD support.

- Flexibility: Serverless options (Consumption tier) and hybrid deployments via self-hosted gateways.

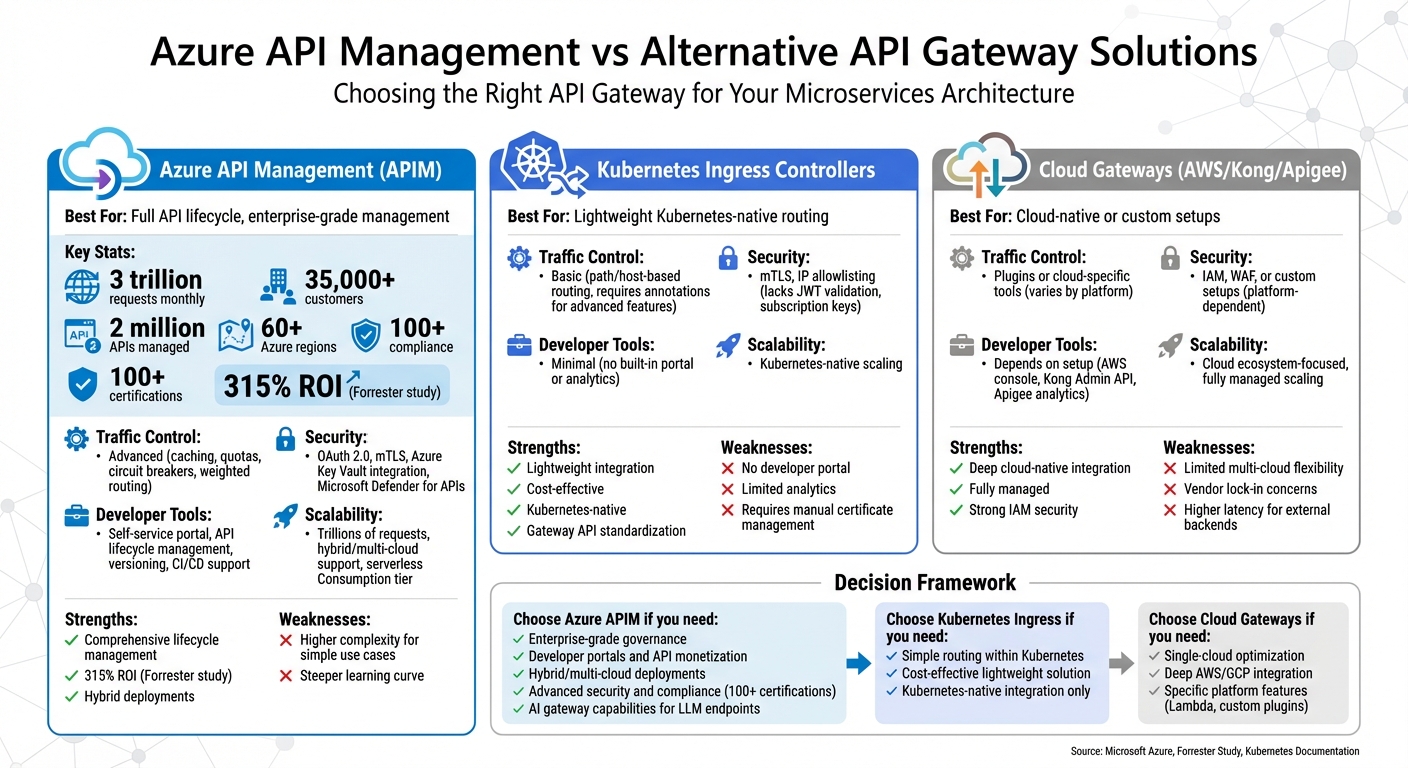

APIM stands out in complex API environments, while Kubernetes Ingress Controllers are better suited to lightweight routing inside Kubernetes clusters. Other gateways, such as AWS API Gateway or Kong, fit AWS-centric or highly customized setups but often lack APIM's built-in integration and governance.

Quick Comparison

| Feature | Azure API Management (APIM) | Kubernetes Ingress Controllers | AWS API Gateway / Kong |
|---|---|---|---|
| Best For | Full API lifecycle, enterprise-grade | Lightweight Kubernetes routing | Cloud-native or custom setups |
| Traffic Control | Advanced (caching, quotas, policies) | Basic (path/host-based routing) | Plugins or cloud-specific tools |
| Security | OAuth 2.0, mTLS, Key Vault | mTLS, IP allowlisting | IAM, WAF, or custom setups |
| Developer Tools | Portal, analytics, API lifecycle | Minimal | Depends on setup |
| Scalability | Trillions of requests, hybrid support | Kubernetes-native scaling | Cloud ecosystem-focused |

For businesses using Azure or managing complex microservices, APIM provides a robust solution to streamline operations and enhance API governance.

Azure API Management vs Kubernetes Ingress vs Cloud Gateways Comparison

1. Azure API Management

Azure API Management addresses a common microservices problem, security and routing logic repeated in every service, by centralizing those tasks. As a fully managed platform, it sits between your microservices and their consumers and handles authentication, rate limiting, caching, and logging. The platform currently manages around 2 million APIs and operates in more than 60 Azure regions.

Core Components

The platform is built around several essential components:

- API Gateway: Validates incoming requests and routes traffic to the appropriate services.

- Management Plane: Supports API definitions using standards like OpenAPI, GraphQL, and gRPC.

- Developer Portal: Provides self-service access for developers.

- Workspaces: Enables teams to manage APIs under a unified governance model.

This setup allows platform teams to oversee infrastructure while giving development teams the freedom to deploy and configure their services independently.

Routing and Traffic Management

Azure API Management serves as a facade, separating clients from backend systems. According to Microsoft:

"The API gateway acts as a facade to the backend services, allowing API providers to abstract API implementations and evolve backend architecture without impacting API consumers".

This design means backend microservices can be refactored, relocated, or replaced without affecting client applications.
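
To make the facade idea concrete, here is a minimal Python sketch. It assumes a hypothetical in-memory route table (`routes`) that maps public path prefixes to backend base URLs; APIM does not store its configuration this way. The point is only that relocating a backend changes one table entry while the URL clients call stays the same.

```python
from typing import Dict


def resolve_backend(routes: Dict[str, str], public_path: str) -> str:
    """Map a public API path to the backend URL a gateway facade would call.

    `routes` is a hypothetical table of public path prefix -> backend base URL.
    The longest matching prefix wins.
    """
    matches = [prefix for prefix in routes if public_path.startswith(prefix)]
    if not matches:
        raise LookupError(f"no route for {public_path}")
    prefix = max(matches, key=len)
    return routes[prefix] + public_path[len(prefix):]


if __name__ == "__main__":
    routes = {"/orders/": "https://orders-v1.internal.example.com/api/"}
    print(resolve_backend(routes, "/orders/42"))  # https://orders-v1.internal.example.com/api/42
    # Relocate the service: only the table changes; clients still call /orders/42.
    routes["/orders/"] = "https://orders-v2.internal.example.com/"
    print(resolve_backend(routes, "/orders/42"))  # https://orders-v2.internal.example.com/42
```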

Traffic management is handled through backend entities and policies. Backend pools support routing strategies like round-robin, weighted routing (useful for blue-green deployments), and priority-based routing. A built-in circuit breaker monitors backend health, automatically returning a 503 Service Unavailable status if a service repeatedly fails - for example, after a specific number of 5xx errors within a defined timeframe. Dynamic caching reduces the load on microservices by storing frequently requested responses, while throttling and quotas ensure no single client overwhelms the system.
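
The circuit-breaker behavior described above can be modeled in a few lines. This Python sketch is not APIM's implementation: the `CircuitBreaker` class, its thresholds, the sliding window, and the trip duration are illustrative assumptions that mirror the idea of tripping after a number of 5xx responses within an interval and failing fast with 503 while tripped. The injectable `clock` makes the behavior easy to test.

```python
import time
from collections import deque
from typing import Callable, Deque, Tuple

Response = Tuple[int, str]


class CircuitBreaker:
    """Trips after `max_failures` 5xx responses within `window_s` seconds,
    then answers 503 without calling the backend for `trip_s` seconds."""

    def __init__(self, max_failures: int = 3, window_s: float = 60.0,
                 trip_s: float = 30.0, clock: Callable[[], float] = time.monotonic):
        self.max_failures = max_failures
        self.window_s = window_s
        self.trip_s = trip_s
        self.clock = clock
        self.failures: Deque[float] = deque()  # timestamps of recent 5xx responses
        self.open_until = 0.0                  # breaker is open while clock() < open_until

    def call(self, backend: Callable[[], Response]) -> Response:
        now = self.clock()
        if now < self.open_until:
            # Breaker open: fail fast instead of hammering an unhealthy backend.
            return 503, "Service Unavailable"
        status, body = backend()
        if 500 <= status < 600:
            self.failures.append(now)
            # Drop failures that fell out of the sliding window.
            while self.failures and now - self.failures[0] > self.window_s:
                self.failures.popleft()
            if len(self.failures) >= self.max_failures:
                self.open_until = now + self.trip_s
                self.failures.clear()
        return status, body


if __name__ == "__main__":
    fake_now = [0.0]
    breaker = CircuitBreaker(max_failures=2, window_s=10, trip_s=5, clock=lambda: fake_now[0])

    def failing() -> Response:
        return 500, "boom"

    print(breaker.call(failing))  # (500, 'boom')
    print(breaker.call(failing))  # (500, 'boom'), breaker trips
    print(breaker.call(failing))  # (503, 'Service Unavailable')
    fake_now[0] = 6.0             # trip period over
    print(breaker.call(lambda: (200, "ok")))  # (200, 'ok')
```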

Security and Compliance

Security is central to Azure API Management. It supports OAuth 2.0, OpenID Connect, and JWT validation to verify user identities and claims. To limit exposure, the gateway can run in Internal mode inside an Azure Virtual Network, where it is reachable only from within the network. Mutual TLS (mTLS) adds certificate-based authentication, both for clients calling the gateway and between the gateway and backend services.
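
For a sense of what JWT validation involves, the following standard-library Python sketch performs the core checks that a gateway policy such as APIM's `validate-jwt` is configured to enforce: signature, expiry, issuer, and audience. It assumes HS256 tokens signed with a shared secret purely to stay self-contained. Production identity providers such as Microsoft Entra ID typically sign with asymmetric keys published through OpenID Connect metadata, and a maintained JWT library is preferable to hand-rolled code. The helper names and claim values are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(segment: str) -> bytes:
    # JWT segments are unpadded base64url; restore the padding before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def make_hs256_token(claims: dict, secret: bytes) -> str:
    """Mint a test token (demo only; real tokens come from the identity provider)."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signature = hmac.new(secret, f"{header}.{payload}".encode("ascii"), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(signature)}"


def validate_jwt(token: str, secret: bytes, issuer: str, audience: str,
                 now: Optional[float] = None) -> dict:
    """Return the token's claims, or raise ValueError naming the first failed check."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, sig_b64 = parts
    header = json.loads(_b64url_decode(header_b64))
    if header.get("alg") != "HS256":
        raise ValueError("unexpected algorithm")  # pin the algorithm; never trust the header blindly
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    now = time.time() if now is None else now
    if claims.get("exp", 0) <= now:
        raise ValueError("token expired")
    if claims.get("iss") != issuer:
        raise ValueError("wrong issuer")
    aud = claims.get("aud")
    if audience not in (aud if isinstance(aud, list) else [aud]):
        raise ValueError("wrong audience")
    return claims


if __name__ == "__main__":
    secret = b"demo-shared-secret"  # hypothetical key
    token = make_hs256_token(
        {"iss": "https://login.example.com/", "aud": "orders-api", "exp": time.time() + 300}, secret)
    print(validate_jwt(token, secret, "https://login.example.com/", "orders-api")["aud"])  # orders-api
```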

The platform integrates with Azure Key Vault for storing certificates and secrets, which policies can reference through "named values" in the API Management instance. For runtime protection, it works alongside Microsoft Defender for APIs and Azure DDoS Protection, helping guard against the OWASP API Security Top 10 risks and other malicious attacks. Azure also holds over 100 compliance certifications, more than 50 of them specific to particular regions and countries.
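
In policy documents, named values appear as `{{name}}` placeholders. The Python sketch below shows the substitution idea only: it assumes a hypothetical `resolve` callback in place of the Key Vault lookup that APIM performs itself, and the placeholder pattern and the `x-api-key` header are also assumptions.

```python
import re
from typing import Callable

# Matches {{name}} placeholders; the allowed name characters are an assumption.
PLACEHOLDER = re.compile(r"\{\{([A-Za-z0-9._-]+)\}\}")


def render_policy(template: str, resolve: Callable[[str], str]) -> str:
    """Replace every {{name}} placeholder with the secret returned by `resolve`.

    `resolve` stands in for a lookup against a Key Vault-backed named value;
    an unknown name should raise rather than silently produce an empty string.
    """
    return PLACEHOLDER.sub(lambda match: resolve(match.group(1)), template)


if __name__ == "__main__":
    fake_vault = {"backend-api-key": "s3cr3t-value"}  # hypothetical stand-in for Key Vault
    policy = ('<set-header name="x-api-key" exists-action="override">'
              "<value>{{backend-api-key}}</value></set-header>")
    print(render_policy(policy, fake_vault.__getitem__))
```

Passing `dict.__getitem__` as the resolver means a missing secret raises `KeyError` instead of emitting a blank header.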

Developer Experience

Azure API Management is designed with developers in mind, offering nearly 50 built-in policies to simplify tasks like authentication, data transformation, and traffic shaping. The platform includes tools for API lifecycle management, such as versioning and revisions, which allow teams to roll out changes without disrupting existing users.
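
APIM version sets can identify the requested version by URL path, HTTP header, or query-string parameter. The Python sketch below resolves a version under each scheme. The `api-version` parameter name, the `/{api_suffix}/{version}/` path layout, and the version labels are assumptions chosen for illustration.

```python
from typing import Dict, Optional, Set
from urllib.parse import parse_qs, urlsplit


def resolve_version(url: str, headers: Dict[str, str], scheme: str, api_suffix: str,
                    versions: Set[str], name: str = "api-version",
                    default: Optional[str] = None) -> Optional[str]:
    """Return the API version a request asks for under one versioning scheme.

    scheme "path":   /{api_suffix}/{version}/..., e.g. /orders/v2/items
    scheme "header": value of the `name` header (case-insensitive)
    scheme "query":  value of the `name` query-string parameter
    """
    parts = urlsplit(url)
    candidate: Optional[str] = None
    if scheme == "path":
        segments = [s for s in parts.path.split("/") if s]
        if len(segments) >= 2 and segments[0] == api_suffix:
            candidate = segments[1]
    elif scheme == "header":
        lowered = {key.lower(): value for key, value in headers.items()}
        candidate = lowered.get(name.lower())
    elif scheme == "query":
        values = parse_qs(parts.query).get(name)
        candidate = values[0] if values else None
    else:
        raise ValueError(f"unknown versioning scheme: {scheme}")
    # Missing or unknown versions fall back to the default (None could map to a 404).
    return candidate if candidate in versions else default
```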

For microservices with fluctuating traffic, the Consumption tier offers a serverless option that scales automatically with demand and charges per execution. In hybrid setups, the self-hosted gateway runs as a container (for example, in a Kubernetes cluster) close to the backends it serves, and APIOps practices let teams push API definitions and policies through CI/CD pipelines.
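
To see when per-execution billing pays off, a little arithmetic helps. The prices here are placeholders, not Azure's published rates: the sketch compares a hypothetical per-million-call price (after a hypothetical free allowance) with a hypothetical fixed monthly unit cost and computes the break-even call volume.

```python
def consumption_cost(calls: int, price_per_million: float, free_calls: int = 0) -> float:
    """Monthly cost under pay-per-execution billing (all prices are placeholders)."""
    billable = max(0, calls - free_calls)
    return billable / 1_000_000 * price_per_million


def break_even_calls(fixed_monthly_cost: float, price_per_million: float, free_calls: int = 0) -> float:
    """Monthly call volume at which per-execution billing costs as much as a fixed-price unit."""
    return free_calls + fixed_monthly_cost / price_per_million * 1_000_000


if __name__ == "__main__":
    # Hypothetical figures for illustration only; check current Azure pricing.
    PRICE_PER_MILLION = 4.0
    FREE_CALLS = 1_000_000
    FIXED_UNIT = 100.0
    for calls in (500_000, 5_000_000, 50_000_000):
        print(calls, consumption_cost(calls, PRICE_PER_MILLION, FREE_CALLS))
    print("break-even:", break_even_calls(FIXED_UNIT, PRICE_PER_MILLION, FREE_CALLS))
```

Below the break-even volume, paying per call is cheaper; sustained traffic above it favors a fixed-capacity tier.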

These capabilities set the baseline for comparing the other gateway approaches that follow.

2. Kubernetes Ingress Controllers

Kubernetes Ingress Controllers are the native entry point for routing external requests to microservices inside a Kubernetes cluster. Unlike Azure API Management, which covers the full API lifecycle, Ingress Controllers focus on routing HTTP/HTTPS traffic by path and hostname. They forward traffic to Kubernetes Services, which give Pods stable endpoints even as Pod IPs change during scaling or node updates. That narrower scope is the core trade-off between Kubernetes-native routing and full API management.
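
The following Python sketch models Ingress-style matching under simplified assumptions: `Exact` and `Prefix` path types as defined by the Kubernetes Ingress API, element-wise prefix matching, longest-path precedence, and single-label `*.` wildcard hosts. Picking the most specific matching host before comparing paths is an assumption about controller behavior, and the `Rule` type is hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Rule:
    host: str       # "" = any host, "*.example.com" = exactly one extra DNS label
    path: str
    path_type: str  # "Exact" or "Prefix"
    service: str


def host_specificity(rule_host: str, host: str) -> int:
    """0 = no match, 1 = catch-all, 2 = wildcard match, 3 = exact match."""
    if not rule_host:
        return 1
    if rule_host.startswith("*."):
        suffix = rule_host[1:]  # ".example.com"
        label = host[: -len(suffix)] if host.endswith(suffix) else ""
        return 2 if label and "." not in label else 0
    return 3 if rule_host == host else 0


def path_matches(rule: Rule, path: str) -> bool:
    if rule.path_type == "Exact":
        return path == rule.path
    # Prefix matching is element-wise on "/": /api matches /api/x but not /apix.
    want = [s for s in rule.path.split("/") if s]
    got = [s for s in path.split("/") if s]
    return got[: len(want)] == want


def route(rules: List[Rule], host: str, path: str) -> Optional[str]:
    scored = [(host_specificity(r.host, host), r) for r in rules]
    best_host = max((score for score, _ in scored), default=0)
    if best_host == 0:
        return None
    # Only rules for the most specific matching host are considered, then paths are compared.
    candidates = [r for score, r in scored if score == best_host and path_matches(r, path)]
    if not candidates:
        return None
    # Longest path wins; on a tie, Exact beats Prefix.
    return max(candidates, key=lambda r: (len(r.path), r.path_type == "Exact")).service
```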

Core Components

Kubernetes Services group Pods and provide stable endpoints for discovery and load balancing. Ingress Controllers take on tasks like SSL termination and basic authentication, reducing the burden on individual microservices. In hybrid setups, APIM's self-hosted gateway can run in the same cluster, processing traffic locally while being managed from Azure's central control plane.

Routing and Traffic Management

Ingress Controllers handle basic path- and host-based routing. More advanced cases, such as canary deployments or header-based routing, usually require vendor-specific annotations, which limits portability between controllers. The Kubernetes Gateway API addresses this with standardized features like traffic splitting, request modification, and gRPC routing, and with role-oriented resources that separate infrastructure and application concerns. For production workloads on Azure Kubernetes Service (AKS), Microsoft recommends Azure CNI powered by Cilium for better service-routing performance through an eBPF-based data plane. Even with these additions, this routing layer remains far simpler than the traffic management features in Azure API Management.
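
In the Gateway API, an HTTPRoute declares header matches and weighted `backendRefs`. The Python sketch below models that routing decision: an exact header match sends testers to the canary, and everyone else is split by weight. Hashing a stable request key so each user stays on one backend is an added assumption; the Gateway API itself only specifies proportional weights. The backend names and the `x-canary` header are hypothetical.

```python
import hashlib
from typing import Dict, List, Optional, Tuple


def pick_backend(request_key: str, headers: Dict[str, str],
                 weighted: List[Tuple[str, int]],
                 header_match: Optional[Tuple[str, str, str]] = None) -> str:
    """Choose a backend: an exact header match wins, otherwise split by weight.

    weighted:     [(backend, weight), ...], e.g. [("orders-v1", 90), ("orders-v2", 10)]
    header_match: optional (header_name, header_value, backend) rule for testers
    """
    if header_match:
        name, value, forced = header_match
        lowered = {key.lower(): val for key, val in headers.items()}
        if lowered.get(name.lower()) == value:
            return forced
    total = sum(weight for _, weight in weighted)
    if total <= 0:
        raise ValueError("at least one backend needs a positive weight")
    # Hashing a stable key (e.g. a user id) keeps each user on one backend across requests.
    bucket = int(hashlib.sha256(request_key.encode()).hexdigest(), 16) % total
    for backend, weight in weighted:
        if bucket < weight:
            return backend
        bucket -= weight
    raise AssertionError("unreachable: bucket is always below the total weight")


if __name__ == "__main__":
    split = [("orders-v1", 90), ("orders-v2", 10)]
    canary_header = ("x-canary", "true", "orders-v2")  # hypothetical header rule
    hits = sum(pick_backend(f"user-{i}", {}, split, canary_header) == "orders-v2"
               for i in range(10_000))
    print(f"orders-v2 share: {hits / 10_000:.1%}")  # roughly 10%
    print(pick_backend("user-1", {"X-Canary": "true"}, split, canary_header))  # orders-v2
```

Raising the canary's weight step by step, while watching error rates, is how such a rollout typically progresses.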

Security and Compliance

Ingress Controllers centralize security at the cluster boundary, so authentication and authorization can be enforced before requests reach services. They support mutual TLS (mTLS) for two-way trust between external gateways and the cluster, as well as IP allowlisting to admit only traffic from approved address ranges. Most, however, do not provide application-level features such as JWT validation or subscription keys out of the box, and they do not integrate with Microsoft Defender for APIs. To get these capabilities, organizations often place Azure API Management in front of the Ingress Controller.
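
IP allowlisting comes down to checking the caller's address against CIDR ranges, which ingress-nginx, for example, exposes through its `nginx.ingress.kubernetes.io/whitelist-source-range` annotation. This Python sketch uses the standard `ipaddress` module; the ranges are documentation examples, not recommendations.

```python
import ipaddress
from typing import Iterable, List, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


def build_allowlist(cidrs: Iterable[str]) -> List[Network]:
    # strict=True rejects entries like "10.0.0.1/8" that have host bits set.
    return [ipaddress.ip_network(cidr, strict=True) for cidr in cidrs]


def is_allowed(client_ip: str, allowlist: List[Network]) -> bool:
    """True if the client address falls inside any allowed range.

    `client_ip` must be the real peer address; trusting a client-supplied
    X-Forwarded-For header here would let callers bypass the check.
    """
    address = ipaddress.ip_address(client_ip)
    # Membership is False when IPv4 and IPv6 are mixed, so both families can share a list.
    return any(address in network for network in allowlist)


if __name__ == "__main__":
    allowlist = build_allowlist(["10.0.0.0/8", "203.0.113.0/24", "2001:db8::/32"])
    for ip in ("10.1.2.3", "198.51.100.7", "2001:db8::1"):
        print(ip, is_allowed(ip, allowlist))  # True, False, True
```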

Developer Experience

Operational simplicity is another key consideration when choosing an API gateway. Self-managed Ingress Controllers require installation, certificate management, and ongoing upkeep through YAML manifests or Helm charts. They have no developer portal or API discovery features because they are built to manage infrastructure, not consumer-facing interactions. They suit straightforward Kubernetes deployments, and more complex routing needs often lead teams to the Gateway API. Organizations that need centralized API lifecycle management, self-service developer portals, and advanced analytics frequently deploy Azure API Management upstream of the Ingress Controller, combining Kubernetes-native routing with enterprise-grade governance.

3. Other Cloud API Gateways

In addition to Azure and Kubernetes-native solutions, several cloud API gateways like AWS API Gateway, Kong, and Apigee are widely used for managing microservices. Each comes with its own strengths and trade-offs.

AWS API Gateway is particularly strong in environments that rely heavily on AWS services. It offers automatic scaling and seamless integration with AWS Lambda, along with robust authentication and authorization through AWS Identity and Access Management (IAM). However, it’s less effective for managing APIs that span multiple clouds or on-premises systems.

Kong, on the other hand, provides extensive customization through plugins for routing, authentication, and rate limiting. This makes it a great choice for organizations with complex microservices needing tailored solutions. But the added flexibility comes at a cost - Kong often requires more manual setup and maintenance, especially in hybrid deployments. Apigee, now part of Google Cloud, focuses on advanced analytics and API monetization features, making it a strong contender for enterprises prioritizing these capabilities. These platforms highlight different approaches compared to Azure’s more integrated ecosystem.

Core Components

Most enterprise-grade gateways share a common architecture built around three core components:

- Data plane: Handles runtime traffic processing.

- Control plane: Manages configuration and policies.

- Developer portal: Facilitates API discovery and documentation.

AWS API Gateway tightly integrates these components within the AWS cloud. Kong, by contrast, supports self-hosted data planes with centralized management for flexibility. Microsoft's Application Gateway for Containers, a Kubernetes-native Layer 7 load balancer, separates its control and data planes, so changes to routes and backend Pods apply almost immediately without redeploying the gateway. Azure API Management's self-hosted gateway follows the same split: a containerized data plane that runs in Kubernetes clusters such as AKS, with its configuration coming from the Azure-hosted control plane.
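
The control/data plane split can be sketched in-process. In this Python model, which is not how Application Gateway for Containers or APIM's self-hosted gateway is built, a hypothetical `ControlPlane` publishes immutable, versioned snapshots and a `DataPlane` adopts a newer snapshot with a single reference swap, so route changes apply without restarting the component that serves traffic. Real systems ship snapshots over the network; that transport is omitted here.

```python
import threading
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class ConfigSnapshot:
    version: int
    routes: Dict[str, str]  # path prefix -> backend; treated as read-only once published


class ControlPlane:
    """Owns desired state and publishes immutable, versioned snapshots."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._routes: Dict[str, str] = {}
        self.latest = ConfigSnapshot(0, {})

    def set_route(self, prefix: str, backend: str) -> ConfigSnapshot:
        with self._lock:
            self._routes[prefix] = backend
            # Copy the dict so published snapshots never change underneath a reader.
            self.latest = ConfigSnapshot(self.latest.version + 1, dict(self._routes))
            return self.latest


class DataPlane:
    """Serves traffic from the last snapshot it applied; it never edits configuration."""

    def __init__(self) -> None:
        self.active = ConfigSnapshot(0, {})

    def sync(self, control: ControlPlane) -> bool:
        snapshot = control.latest
        if snapshot.version <= self.active.version:
            return False
        # One reference swap: a request sees the old or the new config, never a mix.
        self.active = snapshot
        return True

    def handle(self, path: str) -> Optional[str]:
        snapshot = self.active  # read once per request
        matches = [prefix for prefix in snapshot.routes if path.startswith(prefix)]
        return snapshot.routes[max(matches, key=len)] if matches else None
```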

Routing and Traffic Management

Traffic management capabilities vary significantly across platforms. AWS API Gateway handles request routing and throttling well within its ecosystem, but calls to backends outside AWS can add latency. Kong handles core routing natively and adds traffic policies such as rate limiting through plugins, which is flexible but requires manual tuning to perform well.
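
AWS documents that API Gateway throttles requests with a token bucket algorithm. The Python class below is a generic token bucket, not AWS's code: `rate` is the sustained requests per second, `capacity` is the allowed burst, and the values used are illustrative. The optional `cost` argument lets one request consume several tokens, which is similar in spirit to the token-based quotas used for LLM traffic.

```python
import time
from typing import Callable


class TokenBucket:
    """Allows bursts up to `capacity` and a sustained `rate` of tokens per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so an initial burst is allowed
        self.clock = clock
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # a gateway would answer 429 Too Many Requests here


if __name__ == "__main__":
    now = [0.0]
    bucket = TokenBucket(rate=1.0, capacity=2.0, clock=lambda: now[0])
    print([bucket.allow() for _ in range(3)])  # [True, True, False]
    now[0] = 1.0  # one second later, one token has been refilled
    print(bucket.allow())  # True
```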

Microsoft’s Application Gateway for Containers focuses on fast traffic splitting and supports canary deployments through Kubernetes Gateway API resources, avoiding the vendor-specific annotations common in traditional ingress controllers. For teams dealing with fluctuating traffic, Azure API Management’s Consumption tier offers serverless, per-execution billing that keeps costs in line with variable workloads.

Security and Compliance

Security is a critical feature for any API gateway, and each platform handles it differently. AWS API Gateway combines IAM-based authorization with AWS Web Application Firewall (WAF) for protection against common web threats. Kong supports advanced security options, such as custom OAuth2 flows and mutual TLS, but teams must set up and maintain them themselves.

Application Gateway for Containers takes a Kubernetes-native approach, integrating with Azure Web Application Firewall through WebApplicationFirewallPolicy resources. Developers can define WAF rules directly in YAML files alongside their application manifests, enabling a "shift-left" security model where policies are embedded early in the development process. Additionally, Azure boasts over 100 compliance certifications, including more than 50 tailored to specific global regions, and employs 34,000 full-time engineers focused on security. This scale is unmatched by smaller gateway providers.

Developer Experience

The developer experience varies widely between these platforms. AWS API Gateway offers a user-friendly console for those familiar with AWS, but its API documentation tools are less flexible compared to Azure’s customizable developer portal. Kong provides powerful configuration options via YAML files or its Admin API, but this flexibility comes with a steeper learning curve.

Microsoft’s Application Gateway for Containers simplifies workflows by using Kubernetes-native patterns: teams define routing rules, WAF policies, and backend health checks in standard Kubernetes manifests. It does not include a developer portal, so teams that need self-service API discovery and generated documentation typically pair it with Azure API Management's portal.

Advantages and Disadvantages

Let’s break down the key advantages and challenges of each platform to help you better understand how they stack up against one another.

Azure API Management (APIM) is designed to handle massive scale, supporting trillions of requests monthly and serving thousands of customers. It offers a full lifecycle management solution, complete with features like integrated developer portals, policy-based transformations, and strong security measures. The Consumption tier is particularly appealing for its serverless scaling, which charges based on execution, making it a cost-effective choice for fluctuating traffic. However, APIM can feel overwhelming for teams that only need basic routing, as its setup is more complex and requires familiarity with policy-based configuration.

Kubernetes Ingress Controllers provide a lightweight, native integration for Kubernetes environments. They are reliable, straightforward, and cost-efficient for routing needs. The Gateway API further enhances these solutions by offering standardized, role-based resources, reducing vendor lock-in concerns. That said, Ingress solutions lack built-in developer portals, advanced analytics, and monetization tools - features that often require additional third-party tools or custom development.

Cloud gateways such as AWS API Gateway excel at fully managed, serverless operation with deep integration into their respective cloud ecosystems. They handle high traffic volumes efficiently and offer strong security through IAM-based controls. However, their strengths are tied to their native environments, which can make multi-cloud or hybrid deployments more challenging.

Here’s a quick comparison of their strengths and weaknesses:

| Solution | Key Strengths | Primary Weaknesses |
|---|---|---|
| Azure API Management | Comprehensive lifecycle management, self-service developer portal, 100+ compliance certifications, hybrid/multi-cloud support via self-hosted gateways | Higher complexity for simple use cases, requires learning policy-based configuration |
| Kubernetes Ingress/Gateway API | Lightweight, Kubernetes-native integration, cost-effective, standardized routing with Gateway API | No built-in developer portal or analytics, lacks advanced developer tools |
| Cloud Gateways (AWS, Apigee) | Fully managed scaling, deep cloud-native integration, strong IAM security | Best suited for single-cloud environments, limited flexibility for hybrid or multi-cloud setups |

Conclusion

Azure API Management (APIM) shines when your microservices architecture requires more than basic routing. If you're building within the Azure ecosystem and need features like integrated security through Microsoft Entra ID, centralized monitoring via Application Insights, or AI gateway capabilities to manage large language model (LLM) endpoints, APIM offers a unified solution. It supports comprehensive lifecycle management and scales to handle trillions of requests each month. These capabilities directly address the operational complexities of modern microservices.

By centralizing critical functions like security, traffic control, and observability, APIM helps mitigate challenges that arise from decentralized microservices. For serverless workloads with unpredictable demands, the Consumption tier provides a cost-effective pay-per-execution model. For enterprises requiring heightened security, deploying APIM in Internal Mode within a Virtual Network ensures private traffic. Additionally, for hybrid and multi-cloud setups, the self-hosted gateway allows centralized management across Kubernetes clusters while maintaining a unified Azure control plane.

While native controllers may suffice for straightforward routing, APIM’s advanced features make it worth the investment. Whether you're scaling across regions, working with diverse protocols like REST, GraphQL, gRPC, or WebSocket, or preparing for AI-driven workloads with token-based quotas and semantic caching, APIM’s extensive capabilities become indispensable.

To speed up your API modernization journey, consider partnering with AppStream Studio. They specialize in accelerating Azure API Management deployments, reducing timelines from months to weeks. Focused on API and event-driven architecture within the Microsoft stack, their senior engineering teams deliver production-ready solutions tailored for mid-market companies in regulated industries. By combining Azure cloud modernization with governed AI automation, they offer a single, accountable team to simplify your vendor relationships.

A Forrester study highlights the transformative potential of APIM, showing a 315% ROI driven by faster API deployment cycles, fewer security incidents, and the ability to modernize legacy systems without risky migrations. Whether you're just starting with a handful of microservices or managing thousands of APIs, APIM scales effortlessly, ensuring the governance and security that enterprise environments demand.
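
Assuming the study uses the conventional ROI definition (net benefits divided by costs; the underlying benefit and cost figures are not reproduced here), a 315% ROI translates as follows, where B is total benefits and C is total costs:

```latex
\mathrm{ROI} = \frac{B - C}{C} = 3.15
\quad\Longrightarrow\quad
B = (1 + 3.15)\,C = 4.15\,C
```

In other words, each dollar of cost corresponded to roughly 4.15 dollars of benefit over the study period.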

FAQs

How does Azure API Management improve security for microservices?

Azure API Management (APIM) strengthens microservices security by acting as a centralized gateway that applies consistent protection across all your APIs. It handles authentication and authorization with Microsoft Entra ID (formerly Azure Active Directory), OAuth 2.0, OpenID Connect, and client certificates, so individual microservices don't need their own security logic. APIM also enforces runtime policies such as rate limiting, IP allowlists, and request validation, stopping unauthorized or harmful traffic before it reaches your backend systems.

For network isolation, you can expose APIM through private endpoints, deploy it inside a virtual network, or run the self-hosted gateway within your Kubernetes cluster, so that only approved traffic reaches your APIs. Mutual TLS (mTLS) and Azure Key Vault integration add certificate-based authentication and secure secret management. These capabilities make it easier to meet compliance requirements for standards like HIPAA and PCI-DSS.

With its built-in observability tools, including logging and diagnostics, APIM helps you quickly identify and address potential threats. By centralizing all these security measures into one platform, APIM simplifies the process of protecting your microservices architecture, eliminating the need to scatter security controls across individual services.

What’s the difference between Azure API Management and Kubernetes Ingress Controllers?

Azure API Management (APIM) serves as a fully managed API gateway, purpose-built to oversee the entire API lifecycle. It comes packed with features like centralized API design, versioning, security policies (including OAuth, JWT validation, and rate limiting), data transformation, analytics, and a developer portal. APIM operates smoothly across hybrid and multi-cloud setups, making it a strong choice for businesses needing enterprise-level API governance and monitoring.

Kubernetes Ingress Controllers, on the other hand, are lightweight routing tools designed for Kubernetes environments. They focus on HTTP(S) traffic routing to services within a cluster and provide basic functionalities like TLS termination and load balancing. While they are effective for simple traffic management, they lack the advanced API management features APIM offers, such as built-in policy engines or tools for engaging developers.

To put it simply, APIM is ideal for businesses that need end-to-end API management, while Ingress Controllers are better suited for basic traffic routing within Kubernetes clusters.

What makes Azure API Management ideal for handling high-volume API requests?

Azure API Management is built to handle high volumes of API traffic reliably and securely. Multi-region gateway deployments, rate limiting, and response caching let it absorb large request loads without degrading speed or functionality.
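
Response caching of the kind APIM configures with its `cache-lookup` and `cache-store` policies can be sketched as a time-to-live (TTL) cache keyed by method and URL. This Python class is illustrative only: the cache key, the GET-only rule, and the TTL are assumptions, and it omits the eviction and `Vary` handling a real gateway cache needs.

```python
import time
from typing import Callable, Dict, Tuple


class ResponseCache:
    """Caches GET responses for `ttl_s` seconds, keyed by method and URL."""

    def __init__(self, ttl_s: float, clock: Callable[[], float] = time.monotonic):
        self.ttl_s = ttl_s
        self.clock = clock
        self._entries: Dict[Tuple[str, str], Tuple[float, str]] = {}  # key -> (expires_at, body)

    def fetch(self, method: str, url: str, backend: Callable[[], str]) -> Tuple[str, bool]:
        """Return (body, served_from_cache); only GET requests are cached."""
        method = method.upper()
        key = (method, url)
        now = self.clock()
        if method == "GET":
            entry = self._entries.get(key)
            if entry is not None and entry[0] > now:
                return entry[1], True  # fresh hit: the backend is not called
        body = backend()
        if method == "GET":
            self._entries[key] = (now + self.ttl_s, body)
        return body, False
```

Every cache hit is a request the microservice never sees, which is where the load reduction comes from.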

It also includes observability tools that give detailed insight into API usage and performance, making it easier to monitor and tune your microservices architecture. The Consumption tier scales automatically, and dedicated tiers can scale out their units with autoscale rules, so capacity can follow demand.